Abstracts

Drolias Garyfallos Chrysovalantis, Tziokas Nikolaos

Building footprint extraction from historic maps utilizing automatic vectorization methods in open source GIS software

University of the Aegean, Greece

Keywords:

automatic vectorization; historical maps; open source GIS; shape recognition

Summary:

Historical maps are a great source of information about city landscapes and the way it changes through time. These analog maps contain rich cartographic information but not in a format allowing analysis and interpretation. A common technique to access this information is the manual digitization of the scanned map but it is a time-consuming process. Automatic digitisation/vectorisation processing generally require expert knowledge in order to fine-tune parameters of the applied shapes recognition techniques and thus are not readily usable for non-expert users. The methodology proposed in this paper offers fast and automated conversion from a scanned image (raster format) to geospatial datasets (vector format) requiring minimal time for building footprint extraction. The vector data extracted quite accurate building footprints from the scanned map, but the need for further post-processing for optimal results, is imminent.

Chenjing Jiao, Magnus Heitzler, Lorenz Hurni

Extracting Wetlands from Swiss Historic Maps with Convolutional Neural Networks

Institute of Cartography and Geoinformation, ETH Zürich, Switzerland

Keywords:

Siegfried map, Fully Convolutional Neural Networks, wetland reconstruction

Summary:

Historical maps contain abundant and valuable cartographic information, such as building footprints,

hydrography, objects of historical relevance, etc. Extracting such cartographic information from the

maps will be to the benefit of multiple applications and research fields. Specifically, extracting

wetland based on historic maps facilitates researchers to investigate the spatio-temporal dynamics

of hydrology and ecology. However, conventional extraction approaches such as Color Image

Segmentation (CIS) are not perfectly suitable for historic maps due to the often poor graphical and

printing quality of the maps. In recent years, studies that employ deep learning methods, especially

Fully Convolutional Neural Networks (FCNN), provide an efficient and effective way to extract

features from raster maps. Yet, FCNNs require a large amount of training data to perform

sufficiently well. However, producing training data manually might not be an option due to a lack

of resources, which motivates the search for alternative sources of training data. In a recent real-

world use case, wetlands for the whole Switzerland for the year 1880 are to be extracted for a Non-

governmental Organization (NGO) from the Swiss Siegfried Map Series (1872 to 1949) to carry

out a study on landcover change. Since data for this year was not available, data from another study

based on the year 1900 has been used for training [1]. Naturally, both datasets come from somewhat

different distributions. The symbolization may have changed slightly between the two periods, and

the map sheets of the training dataset have been georeferenced in a different way from the sheets of

1880. We developed an FCNN-based method to extract wetlands from the maps. Each map sheet is

subdivided into a grid of tiles with the size of 200x200 pixels to be processable by the FCNN.

Furthermore, to avoid misclassifying the border pixels, the extent of the input tile is expanded by

60 pixels on each side. The model used for the wetland segmentation is based on the U-Net

architecture [2] and was modified according to [3]. The U-Net comprises two diametrical network

paths, the contracting path and the up-sampling path. The contracting path consisting of convolution

layers and max-pooling layers generates increasingly higher abstract representations of the input

image. The up-sampling path infers the class of a pixel based on its position and its surroundings

by gradually combining these features on different granularities. Once the segmentation results are

generated, vectorization is performed using functions from the Geospatial Data Abstraction Library

(GDAL) to convert the raster binary results into vector geometries. Generalization is implemented

using the Douglas–Peucker algorithm, which traces the line segments of the original polyline and

only keeps the start point, end point, and the farthest point of the current line segment. The

vectorized and generalized geometries can be manually corrected to yield high-quality wetland

vector layers. With this method, wetlands are extracted and vectorized based on the selected 573

historic map sheets across Switzerland. Future work will not only focus on the wetland extraction,

but also on other hydrological features on the historic maps.

[1] Stuber, M. and Bürgi, M. (2018). Vom "eroberten Land" zum Renaturierungsprojekt.

Geschichte der Feuchtgebiete in der Schweiz seit 1700. Bristol-Schriftenreihe: Vol. 59. Bern: Haupt

Verlag.

[2] Ronneberger, O., Fischer, P., & Brox, T. (2015). U-Net: Convolutional Networks for

Biomedical Image Segmentation, Cham. In International Conference on Medical image computing

and computer-assisted intervention (pp. 234-241). Springer, Cham.

[3] Heitzler, M., & Hurni, L. (Accepted.) Cartographic Reconstruction of Historical Building

Footprints from Historical Maps – A Study on the Swiss Siegfried Map. Tansactions in GIS.

Anna Piechl

(Semi-)automatic vector extraction of administrative borders from historical raster maps

Austrian Academy of Sciences, Vienna

Summary:

Historical raster maps can be a useful source of information and allow us to travel backwards in time.

The HistoGIS Project wants among other things also the creation of geodata from historical

political/administrative units to allow queries afterwards with this data. Therefore, already existing

vector data is used but also scanned historical raster maps from the 19th and early 20th century.

When it comes to the creation of new vector data the use of historical maps is inevitable. But the

creation and extraction of vector data from raster maps can be very challenging. For sure polygons

and attributes can be created manually by heads-up digitizing. Since this process can be very time

intense and tedious, the aim was to find at least semi-automatic solution for extraction and

vectorization of the administrative borders from the old maps. Therefore, different software tools

and processes were tested. Commercial ones, as well as non-commercial ones whereas we have to

say, that in the HistoGIS Project we focus on using open-source software. The main source of the

maps is the David Rumsey Maps Collection which offers a great variety of scanned historical maps in

different image resolutions. During the tests we figured out that it is not possible to use the tools

without any pre-processing which includes image enhancement as well as image segmentation. This

helps e.g. to get rid of noise or reduce or to improve the picture quality and in case of image

segmentation to isolate the features of interest, the administrative borders. As most of the maps are

from different sources, they have different qualities and colours. Hence it is important to consider

these differences while using different maps when it comes to the parameter settings. These findings

of different tools also let us know that because of the variety of the historical maps there is no one-

size-fits-all automated solution for the vectorization process. Although some of the tools show usable

results, other issues come along when using the vectorized geodata such as inaccuracy and

uncertainty. These problems are caused by small scales, strong generalization of the old maps and by

the motivation of the cartographer who did the map.

Daniel Laumer, Hasret Gümgümcü, Magnus Heitzler, Lorenz Hurni

A semi-automatic Label Digitization

Workflow for the Siegfried Map

Institute of Cartography and Geoinformation, ETH Zurich, Switzerland

Keywords:

historical maps, vectorization, deep learning, convolutional neuronal network, label extraction

Summary:

Digitizing historical maps automatically offers a multitude of challenges. This is particularly true for the case of label

extraction since labels vary strongly in shape, size, orientation and type. In addition, characters may overlap with other

features such as roads or hachures, which makes extraction even harder. To tackle this issue, we propose a novel semi-automatic

workflow consisting of a sequence of deep learning and conventional text processing steps in conjunction with

tailor-made correction software. To prove its efficiency, the workflow is being applied to the Siegfried Map Series (1870–1949)

covering entire Switzerland with scales 1:25.000 and 1:50.000.

The workflow consists of the following steps. First, we decide for each pixel if the content is text or background. For this

purpose, we use a convolutional neuronal network with the U-Net architecture which was developed for biomedical image

segmentation. The weights are calculated with four manually annotated map sheets as ground truth. The trained model can

then be used to predict the segmentation on any other map sheet. The results are clustered with DBSCAN to aggregate the

individual pixels to letters and words. This way, each label can be localized and extracted without background. But since this

is still a non-vectorized representation of the labels, we use the Google Vision API to interpret the text of each label and also

search for matching entries in the Swiss Names database by Swisstopo for verification. As for most label extraction

workflows, the last step consists of manually checking all labels and correcting possible mistakes. For this purpose, we

modified the VGG Image Annotator to simplify the selection of the correct entry.

Our framework reduces the time consumption of digitizing labels drastically by a factor of around 5. The fully automatic part

(segmentation, interpretation, matching) takes around 5-10 min per sheet and the manual processing part around 1.5–2h.

Compared to a fully manual digitizing process, time efficiency is not the only benefit. Also the chance of missing labels

decreases strongly. A human cannot detect labels with the same accuracy as a computer algorithm.

Most problems leading to more manual work occur during clustering and text recognition with the Google Vision API. Since

the model is trained for maps in a flat part of German-speaking Switzerland, the algorithm performs poorer for other parts. In

Alpine regions, the rock hachures are often misinterpreted as labels, leading to many false positives. French labels are often

composed of several words, which are not clustered into one label by DBSCAN. Possible further work could include

retraining with more diverse ground truth or extending the U-Net model so that it can also recognize and learn textual

information.

Alexandre Nobajas

Targeted crowdsourced vectorisation of historical cartography

School of Geography, Geology and the Environment, Keele University, United Kingdom

Keywords:

crowdsourcing, gamification, systematic vectorisation, training set creation

Summary:

Many institutions have systematically digitised their cartographic documents, so there are now

millions of maps which have been digitised and can be accessed on-line. The next step many

map libraries have undertaken has been to georeference their digitised maps, so they can now be

used within modern digital cartographic dataset. However, many of these applications still use

the raster version of the maps, so they hamper the full potential working with historical

cartography has, as it limits the analytical capacity and impedes performing spatial analysis.

Even though there have been timid steps to overcome this limitation, the discipline seems to be

struggling to advance into the next step, the systematic vectorisation of historical maps.

Vectorisation is the process of converting pixel-based images –raster- into node or point based

images –vector, which can then be queried or analysed by individual components. Vector based

maps have a series of advantages when compared to raster maps, as they allow scale changes

without a loss in detail, classifying map features by type or performing spatial queries just to

mention a few. Therefore, by vectorising a historical map we are providing an unprecedented

level of usability to it and allowing detailed inquiries to the information contained into it.

Although there have already been some successful experiences in vectorising historical maps,

they have been either expensive or not systematic, so there is scope to build upon these

successful experiences and achieve a widespread method of vectorisation which can be applied

to map collections across the world.

There are different approaches to overcome this technological and methodological hurdle, most

of them linked to the use of automation techniques such as using remote sensing land

classification methods or, more recently, the use of machine learning and artificial intelligence

techniques. However, a large number of training sets are necessary in order to train the

automation systems and to benchmark the outputs, so large datasets are still needed to be

generated. The use of crowdsourcing methods has been proven as a successful means of

achieving the same goal, as experiences such as the ones developed by the New York Public

Library demonstrate. If given the possibility and the right tools, people will help to vectorise

historic documents, although Nielsen’s 90-9-1 rule will apply, meaning that other ways of

increasing participation are necessary in order to speed the vectorisation up.

This paper proposes a framework in which by using a variety of gamification methods and by

targeting two different groups of people, retirees and school children, historic maps can be

massively vectorised. If fully deployed, this system would not only produce a wealth of

vectorised maps, but it would also be useful in teaching school children important disciplines

such as cartography, geography and history while at the same helping older generations keeping

them mentally active. Finally, an intergenerational dialogue that would enrich both participant

groups would be possible thanks to their common participation in the vectorisation of historical

documents.

Jonas Luft

Automatic Georeferencing of Historical Maps by Geocoding

CityScienceLab, Hafencity University, Hamburg

Summary:

Libraries and researchers face a big challenge: sometimes thousands, millions of

maps are conserved in archives to retard deterioration, but this makes it hard to

obtain specific knowledge of their content, sometimes even of their existence. In

recent years, the efforts have increased to digitise historical documents and

maps to preserve them digitally, make them accessible and allow researchers

and the general public a less restricted access to their heritage.

But maps are more than a cultural artifact: they are data. Data about the past

that can very well be important for science and decisions today. The problem is,

the vast amount alone makes it hard to know what to look for. Maps are usually

archived and catalogued with limited meta-information, sometimes obscuring

their actual content. This makes it impossible to find specific information without

expert knowledge on history and cartography.

Extracting the content, i.e. data, is a necessity to make the map content

searchable and to use modern digital tools for automatic processing and

analyses. For combining data from different maps and comparing them with

modern geospatial data, georeferencing is paramount. This is usually done by

hand and needs a lot of time and specialised training.

We explore if and how we can automatically find usable GCP in historical maps

with computer vision and document analysis methods of today. In this work-in-

progress report, we use OCR on map labels and geocoding of persisting

geographical feature designation for successful first experiments. This shows

text recognition and vectorisation to be a promising research direction for large-

scale automated georeferencing of historical maps.

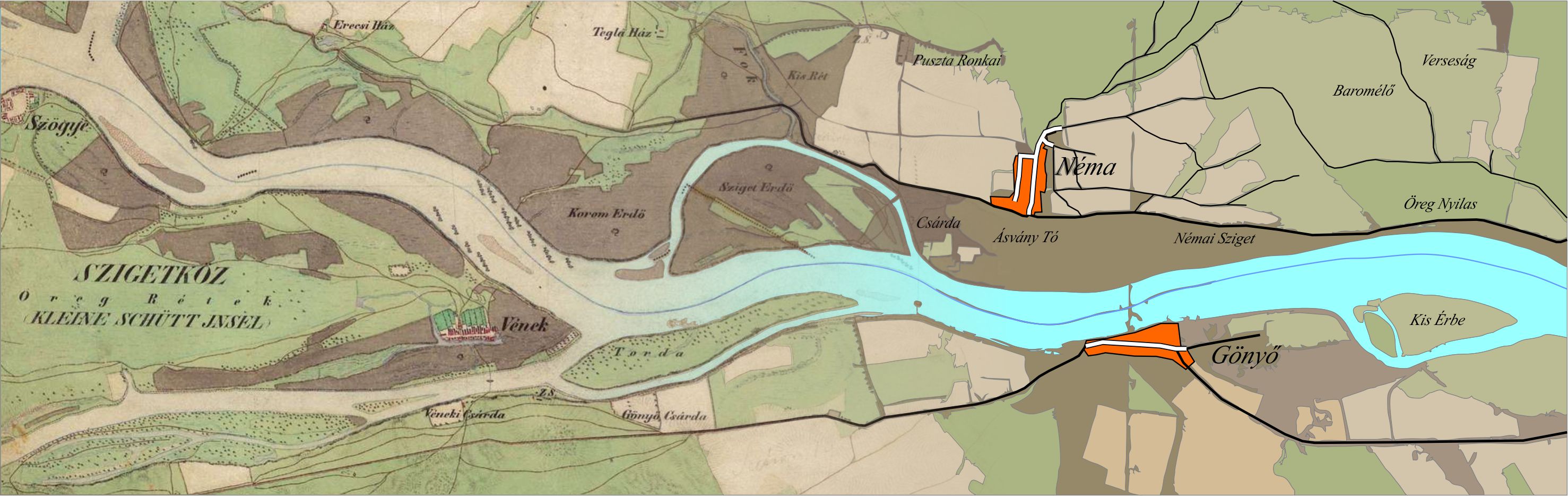

Mátyás Gede, Valentin Árvai, Gergely Vassányi, Zsófia Supka, Enikő Szabó, Anna Bordács, Csaba Gergely Varga, Krisztina Irás

Automatic vectorisation of old maps using QGIS – tools, possibilities and challenges

Department of Cartography and Geoinformatics, ELTE Eötvös Loránd University, Budapest

Keywords:

map vectorisation, QGIS, Python

Summary:

The authors experimented with the open source GIS software QGIS to reveal its possibilities

in automatic vectorisation. The target object was a 1 : 200 000 map sheet of the third Austro-

Hungarian military survey. Although QGIS provides tools for the main steps of the

vectorisation process – such as colour segmentation of the raster image, skeletoning, vector

extraction and vector post-processing – using them on an old map raises several challenges.

This paper discusses the results of these experiments, and introduces a few simple python

scripts that may help users in the vectorisation process.

Geoff Groom, Gregor Levin, Stig Svenningsen, Mads Linnet Perner

Historical Maps – Machine learning helps us over the map vectorisation crux

Department of Bioscience, Aarhus University, Denmark; Department of Environment Science, Aarhus University, Denmark; Special Collections, Royal Danish Library, Copenhagen, Denmark

Keywords:

Denmark, topographic maps, land category, OBIA, CNN, map symbols

Summary:

Modern geography is massively digital with respect to both map data production and map data analysis. When we consider historical maps, as a key resource for historical geography studies, the situation is different. There are many historical maps available as hardcopy, some of which are scanned to raster data. However, relatively few historical maps are truly digital, as machine-readable geo-data layers. The Danish “Høje Målebordsblade” (HMB) map set, comprising approximately 900 sheets, national coverage (i.e. Denmark 1864-1899), and geometrically correct, topographic, 1:20,000, surveyed between 1870 and 1899, is a case in point. Having the HMB maps as vector geo-data has a high priority for Danish historical landscape, environmental and cultural studies. We present progress made, during 2019, in forming vector geo-data of key land categories (water, meadow, forest, heath, sand dune) from the scanned HMB printed map sheets. The focus here is on the role in that work of machine learning methods, specifically the deep learning tool convolutional neural networks (CNN) to map occurrences of specific map symbols associated with the target land categories. Demonstration is made of how machine learning is applied in conjunction with pixel and object based analyses, and not merely in isolation. Thereby, the strengths of machine learning are utilised, and the weaknesses of the applied machine learning are acknowledged and worked with. Symbols detected by machine learning serve as guidance for appropriate values to apply in pixel based image data thresholding, and for control of false positive mapped objects and false negative errors. The resulting map products for two study areas (450 and 300 km2) have overall false-positive and false-negative levels of around 10% for all target categories. The ability to utilise the cartographic symbols of the HMB maps enabled production of higher quality vector geo-data of the target categories than would otherwise have been possible. That these methods are in this work developed and applied via a commercial software (Trimble eCognition©) recognizes the significance of a tried-and-tested and easy-to-use, graphical-user-interface and a fast, versatile processing architecture for development of new, complex digital solutions. The components of the resulting workflow are, in principle, alternatively usable via various free and open source software environments.

Marguerite le Riche

Identifying building footprints in historic map data using OpenCV and PostGIS

Registers of Scotland

Summary:

The House Age Project (pilot) is a Scottish public sector collaboration between the Registers of

Scotland, Historic Environment Scotland and the National Library of Scotland. The aim of the project

is to develop a methodology to (automatically) extract high quality building footprints from a time-

series of scanned historic maps; and to reconstruct life-cycle information for individual buildings by

identifying and tracking them between datasets.

In order to achieve these ambitious aims, a number of research problems are being investigated

using combined GIS, computer vision and entry-level machine learning approaches. The tools used

to date are open source and include python, in particular the computer vision library OpenCV,

Geospatial Data Abstraction Library (GDAL) algorithms, PostgreSQL and its spatial extension PostGIS

and QGIS.

The source data are georeferenced, 600 dpi scans of the Ordnance Survey’s 25-inch-to-the-mile

County Series map sheets for the historic Scottish county of Edinburghshire, provided by the

National Library of Scotland. The maps were produced between the mid 1800’s and mid 1900’s at a

scale of 1:2500 and include detailed information about the built environment at the time of each

survey.

This paper describes a GIS-oriented approach, whereby the raster images are transformed into

georeferenced PostGIS geometries (via OpenCV contours) early in the analytical process, after an

initial image preprocessing and segmentation has been performed using OpenCV. The identification

of buildings, joining of sheets and post-processing is then undertaken using Structured Query

Language (SQL) queries passed to the database using the python psycopg2 module. This approach

makes attaching metadata to individual buildings easy and helps to overcome or partially addresses

some of the challenges in extracting precise building footprints from the source data.

For example, using Structured Query Language (SQL) allows great flexibility in creating new

attributes for and establishing spatial relationships between selected geometries (created from

OpenCV contours) in the same or adjoining map sheets; this makes it possible to obtain high quality

building polygons despite significant variations in print quality; and implementations of the OGC

Simple Features Standard makes it possible to automatically resolve some of the unwanted text and

artefacts created during skeletonisation.

The limitations of the approach is described. For example the geometries created from the OpenCV

contours are highly complex, which can cause unexpected results.

Problems that have resisted a fully automated solution using the GIS approach (or an OpenCV

approach) will be listed. These problems include effects caused by some of the original cartographic

choices, e.g. joining up buildings that are bisected by overprinted, joined-up text, reconstructing

walls that are indicated by dashed lines; as well as issues caused by the age of the original paper

map sheets, e.g damage and warping at sheet edges.

To date, it has not been possible to develop a fully automated extraction process due to the

resistant problems described above, but a semi-automated process has yielded preliminary building

footprints for the study area for the 3 rd and 4 th edition maps, with the 5 th edition to follow.

Kenzo Milleville, Steven Verstockt, Nico Van de Weghe

Improving Toponym Recognition Accuracy of Historical Topographic Maps

IDLab, Ghent University - Imec, Ghent, Belgium; CartoGIS, Ghent University, Ghent, Belgium

Keywords:

automatic vectorization; historical maps; open source GIS; shape recognition

Summary:

Scanned historical topographic maps contain valuable

geographical information. Often, these maps are the only reliable source of

data for a given period. Many scientific institutions have large collections of

digitized historical maps, typically only annotated with a title (general

location), a date, and a small description. This allows researchers to search

for maps of some locations, but it gives almost no information about what is

depicted on the map itself. To extract useful information from these maps,

they still need to be analyzed manually, which can be very tedious. Current

commercial and open-source text recognition systems fail to perform

consistently when applied to maps, especially on densely annotated regions.

Therefore, this work presents an automatic map processing approach

focusing mainly on detecting the mentioned toponyms and georeferencing

the map. Commercial and open-source tools were used as building blocks,

to provide scalability and accessibility. As lower-quality scans generally

decrease the performance of text recognition tools, the impact of both the

scan and compression quality was studied. Moreover, because most maps

were too large to process as a whole with state-of-the-art recognition tools,

a tiling approach was used. The tile and overlap size affect recognition

performance, therefore a study was conducted to determine the optimal

parameters.

First, the map boundaries were detected with computer vision techniques.

Afterward, the coordinates surrounding the map were extracted using a

commercial OCR system. After projecting the coordinates to the WGS84

coordinate system, the maps were georeferenced. Next, the map was split

into overlapping tiles, and text recognition was performed. A small region

of interest was determined for each detected text label, based on its relative

position. This region limited the potential toponym matches given by

publicly available gazetteers. Multiple gazetteers were combined to find

additional candidates for each text label. Optimal toponym matches were

selected with string similarity metrics. Furthermore, the relative positions

of the detected text and the actual locations of the matched toponyms were

used to filter out additional false positives. Finally, the approach was

validated on a selection of 1:25000 topographic maps of Belgium from

1975-1992. By automatically georeferencing the map and recognizing the

mentioned place names, the content and location of each map can now be

queried.

Előd Biszak, Gábor Timár

First vector components in the MAPIRE: 3D building modelling and Virtual Reality view

Arcanum Database Ltd., Budapest, Hungary; Dept. of Geophysics and Space Science, ELTE Eötvös Loránd University, Budapest, Hungary

Summary:

MAPIRE is an originally raster-based portal, presenting scanned and geo-referred historical

topographic, cadastral and town maps, as overlays on modern cartographic datasets, such as

OpenStreetMaps or HereMaps. Its first 3D option was adopted via the SRTM elevation dataset, still

on raster base, showing 3D panoramas from the viewpoint, using the historical map layer. Here we

introduce the first vector component of the MAPIRE, developed in Cesium software environment. To

improve the city and town maps, we applied a new 3D building modelling utility (MapBox Vector

Tiles). The details of the historical buildings – varying in different time layers – are reconstructed

from archive plans as well as photographic heritage provided by the Arcanum (the host of MAPIRE)

and historical postcards. Unrevealed building parts are defined according to the ‘usual outline’ of the

given age. Using this technology, we are able to provide virtual 3D tours for the users of MAPIRE, first

in the frame of the ‘Budapest Time Machine’ project. Besides, the Virtual Reality (VR) tour is available

also in the service, still in raster basis: as a pilot project, we can follow pre-recorded paths on the

historical map using VR glasses.